We are working on the paper on Talk Aloud protocol in survey piloting! Come back soon to see updates!

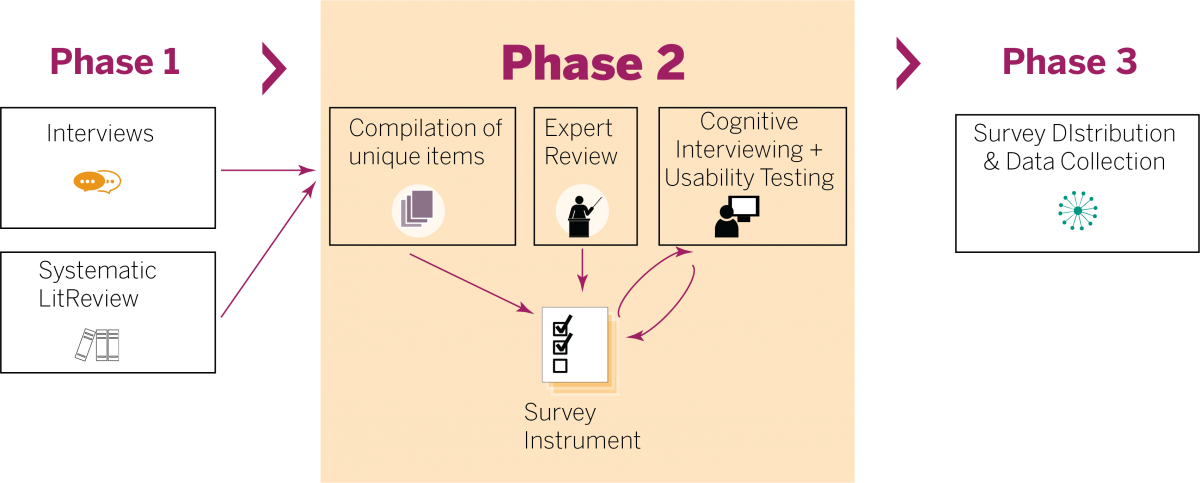

To pilot the survey for our mixed-methods study of instructional designers and educational technologists (learn more about the study), we decided to use a more agile and iterative model. This allowed us to collect data from a relatively limited number of participants by revising the questionnaire design as soon as respondents experienced challenges or provided recommendations.

We conducted our think-aloud testing over WebEx teleconferencing software. Since participants shared their screens, interviewers could observe participants’ mouse movements. This allowed interviewers to notice hesitations, movements such as scrolling up and down or navigating back and forth between pages, and changes in answers. Where necessary, participants were prompted to explain these actions, which frequently allowed us not only to identify unclear or challenging instructions, question stems, or response options, but also to understand why participants struggled with them.

Additionally, we asked questions at key points to understand whether participants were consistent in their interpretations. For example, we asked each participant to explain their thought process in determining which point to choose on a Likert-type scale. After they had completed the survey, we asked questions to gain an understanding of their overall impressions of the survey, their opinion about the incentive offered, and their likelihood of participating if they had received an invitation. It took 50-90 minutes to complete the protocol for our 25-minute survey. At least two members of our team conducted each test, with one member leading the test and one or more taking detailed notes. We also used WebEx’s built-in recording capability to record each session, allowing for potential future review.

We tested the survey with a total of 12 participants across several user groups. Our initial questionnaire was tested on three participants, after which we discussed our findings and made major revisions. We then proceeded to conduct tests with members of different user groups, debriefing and making small changes after every 1-2 tests. Beyond the second round of changes, the majority of issues identified were based on varied interpretations of individual items by participants with different backgrounds. It was difficult to determine a “stopping point” as we continued to learn more and make additional changes after each round. However, we finally stopped after we had tested with 2-3 participants from each user group and no longer identified any additional major changes.